Local Governments Find Creative Ways to Modernize Their Data Centers

Credit: John Lee

David Newaj, infrastructure manager, San Joaquin County, Calif.

Like many governments, San Joaquin County, Calif., wants to modernize its data center by making it smaller, greener and cloudier.

This spring, as a number of rack servers reached end of life, the county IT department upgraded to the latest technology: a converged infrastructure. The new unified computing equipment will allow the county to quickly virtualize its applications and build a private cloud to speed the delivery of IT services. The result will be a fully consolidated, energy-efficient, cost-effective data center that is much easier to manage.

"As budgets have dwindled, we're looking for ways to maintain our level of service to our customers without having to add bodies," says David Newaj, San Joaquin County's infrastructure manager. "One of our biggest pain points is the time it takes to provision servers so our users can do their work. With the Cisco infrastructure for the cloud, users can provision services for themselves."

As the economy recovers, many local and state government IT departments are pursuing long-delayed projects to improve their data center operations, says Randi Powell, a research analyst at Deltek. Initiatives include completing existing virtualization and consolidation projects and improving disaster recovery.

"State and local governments move at the speed that their budgets allow," she says. "They've been trying to stretch out the equipment they have for as long as they can, but at the end of last year and this year, we've seen a rebound, and they're moving forward with their postponed projects."

Today, governments have a growing number of data center options. They can build a new data center or overhaul existing facilities with the latest technologies. Alternatively, they can colocate some or all of their data center operations with hosting providers or offload some of their needs to public-cloud or managed-service providers.

What IT leaders choose to do depends on their individual requirements and situations, Powell says. "It really depends on leadership, how much money they have and the kind of philosophy they have."

Optimizing the Data Center, Leading with Virtualization

Faced with all these options, Jerry Becker, San Joaquin County's IS director, chose to upgrade his data center so the county could completely virtualize its servers and build private-cloud services.

During the past five years, the county had virtualized half its rack servers, cutting its physical servers from about 190 to 90. But to virtualize the remaining applications, the county needed to upgrade its infrastructure to ensure it had the server and storage capacity to do so, Newaj says. "We had 30 to 40 servers coming up for refresh, and rather than a one-for-one replacement, we wanted to leverage our money and future-proof our purchases," he says.

In March, the county standardized on 17 Cisco UCS B-Series blade servers, EMC storage area networks, the latest VMware virtualization software and four Cisco Nexus 5000 Series switches, which upgrade the county's core network speed from 1 gigabit per second to 10Gbps.

The converged infrastructure tightly integrates the components into a shared pool of resources that the IT staff can centrally manage through one software management console called Cisco UCS Director (formerly Cisco Cloupia).

In June, San Joaquin's IT department used Cisco UCS Director to create a cloud service that allows users to spin up their own virtual servers. In the past, developers from county departments had to write up work orders and have the county's IT staff build virtual machines for them, a task that would take an hour of work and three days to fulfill.

"Our users can just go to a portal, click what they want, and within minutes, they have availability of server resources," Newaj says. "It's gone from three days to 10 minutes. It's a huge performance improvement."

The IT staff has been internally testing the cloud service and plans to formally launch it in late September or early October. Next year, the IT staff will offer cloud storage services.

The converged infrastructure, combined with Cisco UCS Director, automates many formerly manual tasks, Newaj says. In the past, when the IT staff built a VM, they had to manually add it to a domain and apply the latest security updates and software patches. Now, that work is done automatically, he says.

The county aims to virtualize most of its remaining applications by November. And in doing so, it will improve disaster recovery as IT staff replicate VMs to the county's secondary data center.

Overall, Newaj is impressed with the converged infrastructure. "It provides significant savings in both staff time and operational costs. And users get the resources they need much faster to do their jobs."

Data Center Upgrades Have Positive Side Effects

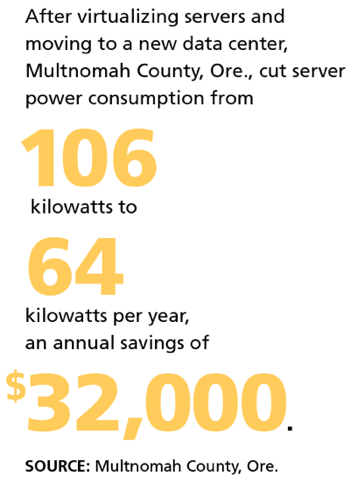

In Multnomah County, Ore., CIO Sherry Swackhamer took a three-pronged approach to her data center strategy: She built a new primary data center, contracted with a colocation facility for disaster recovery and subscribed to commercial cloud services for several applications.

Credit: Robbie McClaran


Sherry Swackhamer says Multnomah County, Ore., took a sustainable approach in building its new LEED gold-certified data center.

About five years ago, the county started drawing up plans for a new data center because the old one — housed in a 50-year-old retrofitted grocery store — was in bad shape. The computer room's air conditioning and uninterruptible power supply system were at full capacity, and outages were growing more frequent.

"We were living on the edge. It would have taken six to 12 months and millions of dollars to restore operations if there was a major failure," recalls Swackhamer, who also serves as the director of the Department of County Assets.

The county spent 18 months building a new facility that included a $4.3 million data center. County officials moved into the new LEED gold-certified building last year.

Swackhamer timed a necessary IT equipment refresh to coincide with the data center migration. The county purchased nine HP ProLiant DL servers, four Oracle Sparc T-series servers, NetApp FAS3240 networked storage and Cisco Nexus 7000 Series switches.

The IT staff had previously used Hyper-V to virtualize 30 percent of its applications. But before moving to the new facility, Multnomah's IT staff switched to VMware, virtualized nearly all its applications and migrated the VMs and data across the network to the new data center.

Rather than build a secondary data center, the county saved money by using a colocation facility for disaster recovery. The county also migrated some applications to the commercial cloud, including email and calendaring, website hosting and a time-tracking application for the county attorney's office.

Moving forward, Swackhamer believes other government agencies will also use a mix of internal and external data center resources. Colocation and cloud vendors have grown more sophisticated, making them viable alternatives.

"You have to be flexible in your thinking," she says. "You don't know where things are going in the future, so virtualize as much as you can, and use the cloud where it's appropriate."

Earthquake Preparedness in the Data Center

Elsewhere, the city and county of San Francisco's move to shared infrastructure will aid continuity of operations. The city is virtualizing and consolidating its data centers and, this spring, struck a colocation agreement with a state data center to serve as a backup site for disaster recovery, says Marc Touitou, San Francisco's CIO and director of the Department of Technology.

Because San Francisco's government is decentralized, each city department can run and house its own IT equipment. Touitou intends to have departments migrate to one of the city's three main data centers, including one at San Francisco International Airport that will open this fall.

With that setup in place, the IT staff can use virtualization to replicate the city's most important applications to the state data center and keep operations running if a disaster strikes. "If we have a nasty earthquake or some kind of long downtime, we will know that our main applications are protected," Touitou says.

Within a year, Touitou expects several large departments to migrate their IT infrastructure to the city's new airport data center, which is built with a mixed server environment that includes VCE's Vblock converged infrastructure.

Once the first departments make the move, Touitou hopes to convince more to join in the shared infrastructure. "We are trying to create an incentive for them by saying, 'We have a new architecture that is more efficient and can lower your costs,' " he says.

Governments Go All In with Outsourcing

While other communities are investing in their own data centers, the commonwealth of Pennsylvania plans to turn completely to the cloud.

The Keystone State plans to consolidate its six existing data centers and hire a provider that will use a hybrid private- and public-cloud architecture to meet the state's server and storage needs, says state CIO Tony Encinias.

Pennsylvania is currently in the request-for-proposal process and hopes to award a contract around January 2014. "We want to buy compute and storage as a commodity and utilize the on-demand model where we pay for what we use," he says.

The state expects the move to deliver significant cost savings and improved IT services. "The question is, do you really need to own infrastructure and the capital expenditures associated with that? Or do you want to leverage a vendor that can spread the costs of operating a data center to multiple customers, and let them worry about it?" Encinias says. "It was a no-brainer for us. We will be able to do more things at a lower cost."

The Job Is Never Done

Public-sector IT leaders are always looking to improve services while reining in costs. As a result, consolidation is a constant work in progress, says Richard Villars, vice president of data center and cloud at IDC.

"They're constantly having to look at their existing assets — data center facilities, server rooms and closets — that are spread all over an organization," Villars says. "Over time, they are trying to find other ways to consolidate and move to a more centralized facility or shared facilities."

Washington state is doing just that. The state's decentralized model allows individual agencies to manage their own IT environments. During the past few years, however, the state's central IT organization has focused on consolidation, convincing agencies to use one of the state's central data centers, says CIO Michael Cockrill.

As a result, since 2009, Washington has cut its number of data centers by more than half, from 29 to 14, says Rob St. John, director of the state's Consolidated Technology Services. And this summer, Cockrill announced that the state would eliminate one of its central data centers and move that workload to a 2-year-old data center, saving $23 million.

Virtualization and the reduction of physical servers have played a huge role in the consolidation effort. The state is currently 70 percent virtualized, so there is still room for more consolidation and cost savings, St. John adds.

"As we virtualize more, it will lead to a natural evolution of more consolidation," he says.