State and Local IT Teams Use Real-Time Data to Boost Services

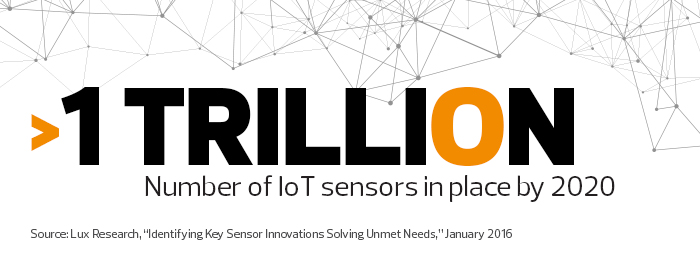

A quickly growing army of sensors is taking position to capture volumes of data as the country transforms into an always-on society. Across a broad swath of industries, instantaneous information is no longer the exception, but an expectation. State and local agencies that harness the potential of that real-time data stand ready to change how government works and delivers services to citizens.

Fort Collins, Colo., for example, has used an extensive fiber network, sensors and live video feeds to gather up-to-the-minute insights about traffic volume. Now, it’s extending the WAN foundation to monitor air quality. Working with a local firm, the city installed a park bench embedded with sensors that use a Sierra Wireless Airlink GX450 gateway to collect detailed environmental information, while also giving people a place to sit and enjoy some urban greenery.

“We’re at an economic inflection point in the clean-energy space that is analogous to the shift from horse transportation to internal-combustion vehicles,” says Sean Carpenter, climate economy adviser for the city. “With the data we’re able to collect, we can ensure we’re with the rise of the automobile rather than being the last buggy-whip manufacturer.”

To enable innovations like these, cities must find ways to fully tap the large volumes of data they’re collecting with the help of reliable wireless and wide area networks. When combined with advanced analytics applications, this trove of information can help planners decide how to spend limited budgets more effectively, meet citizen expectations for new services and promote business development.

Analytics Promote Painless Parking

Another decidedly 21st-century move in Fort Collins is designed to mitigate one of the most common problems for citizens: parking in busy downtown areas. Sensors will send data over WANs to track parking space turnover and availability in a new parking structure and in surface parking lots. The information will feed a mobile application that will notify drivers of available spots and then let them pay for parking. “Citizens will no longer have to feed a meter, to use a 20th-century term,” says Mark Jackson, deputy director for planning, development and transportation for the city.
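As a rough illustration of how sensor readings could become the availability and turnover figures described here, the following minimal Python sketch replays a list of occupancy events. The `SensorEvent` record, the space identifiers and the sample events are all hypothetical; nothing here reflects the actual Fort Collins system.

```python
from dataclasses import dataclass


@dataclass
class SensorEvent:
    space_id: str   # hypothetical identifier for a parking space
    minute: int     # minutes since midnight
    occupied: bool  # True when a vehicle arrives, False when it leaves


def summarize(events, all_spaces):
    """Replay events in time order; return (available spaces, turnover per space)."""
    occupied = {space: False for space in all_spaces}
    turnover = {space: 0 for space in all_spaces}
    for event in sorted(events, key=lambda e: e.minute):
        # A departure from an occupied space counts as one completed stay.
        if occupied[event.space_id] and not event.occupied:
            turnover[event.space_id] += 1
        occupied[event.space_id] = event.occupied
    available = sorted(s for s, busy in occupied.items() if not busy)
    return available, turnover


# Made-up sample data for three spaces.
events = [
    SensorEvent("A1", 480, True),
    SensorEvent("A2", 485, True),
    SensorEvent("A1", 540, False),
    SensorEvent("A1", 545, True),
    SensorEvent("A2", 600, False),
]
available, turnover = summarize(events, ["A1", "A2", "A3"])
print(available)  # spaces a driver could be pointed to
print(turnover)   # completed stays per space
```

With this sample data, spaces A2 and A3 end up free, and A1 and A2 have each seen one completed stay.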

Fort Collins officials are also in talks with commercial firms to share some of the real-time traffic information the city collects. One contractor is partnering with the German automaker Audi, which plans to introduce vehicle technology that tells drivers how much time remains before a stoplight changes from red to green.

“Like many communities, we’re just trying to wrap our heads around next-generation transportation questions and policies,” Jackson says. “There are so many permutations and questions. It’s like trying to solve a Rubik’s Cube.”

Kansas City Overcomes Pothole Problems with Data

In Kansas City, Mo., officials are applying quality analysis to make the most of the large quantity of data they collect. “We didn’t realize how much we actually knew about ourselves until we looked deeper into the 4,200 data sets the city already maintains,” says Bob Bennett, chief innovation officer for the city.

Case in point: the age-old problem of potholes. Every year, Kansas City allocates about $4 million for road upkeep, which is only about half of what it costs to fully meet the city’s needs, Bennett says.

To cope with limited resources, the city uses data to predict where potholes are most likely to develop, and targets spending to those areas. Key data points include the dates and contractors for previous resurfacing projects, traffic volume and 311 reports. To collect relevant data, hundreds of Cisco Systems wireless access points were installed, along with traffic sensors that capture the weight and number of vehicles rolling through downtown.

The city merges this information with statistics showing block-level changes in temperature and precipitation rates supplied by public and private organizations. An analytics application then crunches the combined data. “We’ve discovered that potholes are likely to develop in vulnerable places 77 days after high precipitation and a freeze/thaw cycle,” Bennett says, adding that the data model predicts the location of potholes with about 85 percent accuracy.
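To make the 77-day finding concrete, here is a minimal Python sketch of a rule-based flag: if a vulnerable block sees both heavy precipitation and a freeze/thaw cycle, it is marked for attention 77 days later. This is not the city's analytics application. The record types, the vulnerability thresholds and the sample block are assumptions made up for illustration; only the 77-day lag and the kinds of inputs come from the article.

```python
from dataclasses import dataclass
from datetime import date, timedelta

LAG_DAYS = 77  # lag quoted by Bennett


@dataclass
class BlockRecord:
    block_id: str
    years_since_resurfacing: float
    daily_vehicles: int
    reports_311: int


@dataclass
class WeatherEvent:
    block_id: str
    day: date
    heavy_precipitation: bool
    freeze_thaw: bool


def is_vulnerable(block):
    # Hypothetical thresholds, chosen only to make the example run.
    worn = block.years_since_resurfacing >= 8 and block.daily_vehicles >= 10_000
    return worn or block.reports_311 >= 3


def forecast(blocks, events):
    """Return (block_id, expected_date) pairs for blocks at risk."""
    by_id = {b.block_id: b for b in blocks}
    flagged = []
    for ev in events:
        block = by_id.get(ev.block_id)
        if block is None:
            continue  # weather reading for a block we have no records on
        if ev.heavy_precipitation and ev.freeze_thaw and is_vulnerable(block):
            flagged.append((ev.block_id, ev.day + timedelta(days=LAG_DAYS)))
    return flagged


# Made-up sample: one worn, busy block and one recently resurfaced block.
blocks = [
    BlockRecord("1200-main", 9.0, 14_000, 1),
    BlockRecord("300-oak", 2.0, 3_000, 0),
]
events = [
    WeatherEvent("1200-main", date(2018, 1, 10), True, True),
    WeatherEvent("300-oak", date(2018, 1, 10), True, True),
]
flagged = forecast(blocks, events)
print(flagged)
```

In this sample only the worn, heavily traveled block is flagged, with an expected date of March 28, 2018, which is 77 days after the January 10 weather event.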

This information has broad financial implications. Instead of spending significant amounts of money for relatively short-term repairs to newly reported potholes, the city is earmarking funds for preventive maintenance using materials rated to last 15 years. Over time, this may shrink the city’s funding gap. “We anticipate that instead of having a bill for $8 million, predictive maintenance will reduce the annual need to about $6 million in three to five years,” Bennett explains. “We hope that in 10 years, our maintenance bill will be down to about $4 million,” he adds. That figure would match the current allocation.
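The arithmetic behind the shrinking gap can be spelled out in a few lines of Python. The dollar figures are the approximate ones Bennett cites. Holding the allocation flat at $4 million over the whole period is an assumption made here for simplicity.

```python
ALLOCATION_M = 4  # annual road upkeep allocation, in millions of dollars

# Approximate annual need cited in the article, in millions of dollars.
projected_need_m = {
    "today": 8,
    "in three to five years": 6,
    "in 10 years": 4,
}

# Gap = need minus allocation (allocation assumed constant).
gaps = {when: need - ALLOCATION_M for when, need in projected_need_m.items()}

for when, gap in gaps.items():
    print(f"{when}: ${gap} million unfunded")
```

Under that assumption the unfunded amount falls from about $4 million today to about $2 million, and then to zero once the need matches the allocation.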

AI Promotes Healthier Communities

The Minnesota Pollution Control Agency is feeding real-time weather data into an analytics program using artificial intelligence to promote the health of citizens, particularly those with respiratory troubles. This effort produces daily air quality forecasts, as well as alerts when necessary.

“Our biggest events in the past five years have been related to wildfire smoke coming from the western U.S. and various parts of Canada,” says Steve Irwin, an air quality meteorologist with the agency. “Until recently, there was no way for many citizens to know that their health might be impacted.”

In the past, forecasts covered only the large urban areas of Minneapolis/St. Paul and Rochester. Officials wanted to expand the analyses to encompass the entire state, but limited staffing made this impossible until the agency found a way to automate much of the process using AI.

MPCA staff wrote a program for automatically collecting the relevant weather data from a variety of online sources, including the National Weather Service and universities. The AI program, also developed in-house using open-source tools, crunches the numbers to develop the baseline analyses, which the agency’s three meteorologists then review and enhance.
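The collect, analyze and review flow described above might look something like the following Python sketch. It is a loose illustration, not the MPCA's program: the two source functions are hypothetical stand-ins that return ready-made air quality index (AQI) estimates rather than raw weather data, a plain average replaces the agency's AI model, and using AQI 101 (the start of the EPA's "Unhealthy for Sensitive Groups" band) as the alert trigger is an assumption.

```python
from statistics import mean

# Assumed trigger: lower bound of the EPA "Unhealthy for Sensitive Groups" band.
ALERT_AQI = 101


def source_a():
    # Hypothetical feed returning made-up AQI estimates per location.
    return {"Duluth": 62, "Rochester": 48}


def source_b():
    # Second hypothetical feed; covers one extra location.
    return {"Duluth": 70, "Rochester": 52, "Bemidji": 118}


def collect(sources):
    """Gather every source's estimate for each location."""
    merged = {}
    for fetch in sources:
        for location, value in fetch().items():
            merged.setdefault(location, []).append(value)
    return merged


def baseline(merged):
    """Average the estimates per location (placeholder for the AI model)."""
    return {loc: round(mean(values)) for loc, values in merged.items()}


def draft_for_review(forecast):
    """Build draft forecasts that a meteorologist still has to review."""
    return [
        {"location": loc, "aqi": aqi, "alert": aqi >= ALERT_AQI, "reviewed": False}
        for loc, aqi in sorted(forecast.items())
    ]


drafts = draft_for_review(baseline(collect([source_a, source_b])))
for draft in drafts:
    print(draft)
```

Every draft starts with `reviewed` set to `False`, reflecting the article's point that the automated output is a baseline that the meteorologists check before anything is published. In this sample only Bemidji crosses the assumed alert threshold.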

“One of the goals is to create a proactive program, so if an analysis determines an alert condition, we can let sensitive populations know in time to change their behavior,” says Ruth Roberson, supervisor in the agency’s risk evaluation, modeling and air quality forecasting unit.

Alerts appear on Department of Transportation signs on highways, on social media and in a mobile application created by the state.

“This project shows us the benefit of learning from our data,” Roberson says. “In the future, we’ll be looking for ways to apply analyses like these to other areas in our agency.”