Today, state government IT teams use AI tools to perform tasks such as anomaly and threat detection at scale, says Randy Rose, vice president of security operations and intelligence at the nonprofit Center for Internet Security.

“The biggest win from an AI perspective is being able to find low and slow attacks with a much higher efficiency,” Rose says. “Machine learning can find things that are really hard to detect because there’s a long period of time between one part of the attack and another, and they can do it much more easily than humans can.”

AI Detection Can Quickly Spot and Report Issues

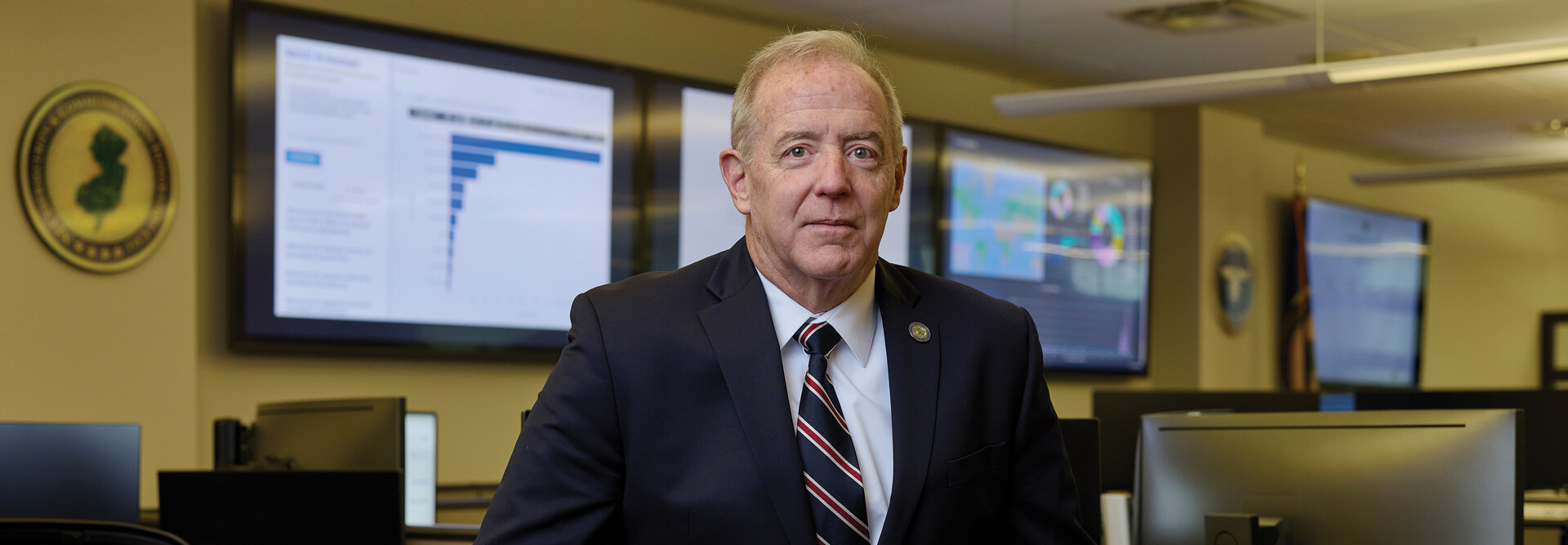

The New Jersey Cybersecurity Communications Integration Cell has used the Google SecOps security information and event management system, which can analyze security data from across an organization’s environment, for about five years as part of its cybersecurity approach, Geraghty says.

NJCCIC has also deployed Google’s BigQuery data platform, CrowdStrike’s endpoint detection and response (EDR) solution and Palo Alto Networks’ next-generation firewall.

“They’re all using AI to some degree,” Geraghty says. “The days of using signatures that are very brittle to detect malware or phishing emails are over. Today, we have endpoint detection and response, which is behavior-based and deals with a lot of machine learning AI models.”

NJCCIC cybersecurity professionals also use AI tools to quickly review lengthy technical reports and other content.

“In Congress, you might have a proposal that’s 1,500 pages long,” Geraghty says. “It would take quite some time for somebody to read that. Allowing a generative model to pull out key facts and summarize it is really helpful.”