Fact or Fallacy: How State and Local Agencies Can Maximize Big Data

The potential advantages of Big Data read like a most-wanted list for any agency aiming to innovate: increased transparency, collaboration and citizen engagement. By analyzing Big Data, leaders can lean less on subjective factors and instead measure the success of programs with objective evidence that proves their value to the community.

While Big Data opportunities are exciting, citizens, elected officials and regulatory bodies will scrutinize every dollar spent. As with any technology, government must ensure a business intelligence deployment is cost-effective.

To realize the most gains from Big Data, sift through the hype and uncover reality.

Fact: Big Data Means More Than Just a Lot of Data

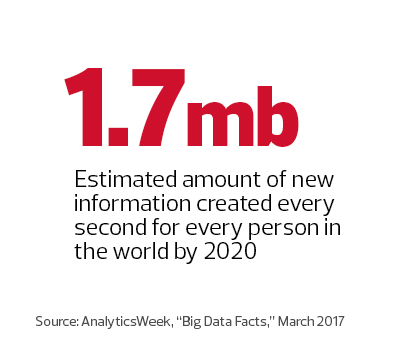

While there’s no question the term Big Data refers to large data volumes, the broader concept is much more complex. Big Data also refers to how structured and unstructured data sets combine with other information (IoT data, for example) to paint a more in-depth picture. By applying advanced analytics, analysts discover patterns and anomalies, recognize relationships and test predictions, which helps them make better-informed decisions they can act on.

Big Data also embraces analyzing data flows on the fly: confirming assumptions, uncovering unexpected correlations and allowing adjustments in real time. That’s a game-changing advance over the traditional method, in which users analyze data periodically to confirm assumptions. Simply running reports at the end of a quarter will not address time-critical issues central to government functions, such as public health, disaster prevention and emergency response.
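To make the contrast with end-of-quarter reporting concrete, here is a minimal sketch of on-the-fly analysis: each new reading is compared with a rolling window of recent values and flagged as it arrives if it deviates sharply. The function name, the window size, the threshold and the sample call volumes are all illustrative assumptions, not figures from any agency or product.

```python
from collections import deque
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Yield (index, value) for readings far outside the recent rolling average.

    `window` and `threshold` are assumed tuning values for illustration only.
    """
    recent = deque(maxlen=window)  # holds only the most recent `window` readings
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu = mean(recent)
            sigma = stdev(recent)
            # Flag the reading as soon as it arrives, not at report time
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield i, value
        recent.append(value)


# Hypothetical hourly emergency-call volumes with one sudden spike
hourly_calls = [20, 22, 19, 21, 20, 23, 21, 95, 22, 20]
alerts = list(flag_anomalies(hourly_calls))
print(alerts)
```

Because the function is a generator, it can consume a live feed one reading at a time. A production system would likely use a more robust baseline, since a spike that enters the window inflates the average and spread used to judge the next few readings.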

Fallacy: Human Analysts Will Become Unnecessary

Analytics tools have become so advanced that users tend to trust their output automatically. Today, an array of on-premises and cloud-based analytics platforms and data plug-ins allows government IT staff and analysts to perform data modeling for specific projects. Staff then distribute those models to a range of users, who ask questions and correlate output in user-friendly dashboards.

Savvy IT leaders should never underestimate the importance of the human component when translating analytical findings into meaningful actions. In fact, even when they hire data scientists who can build predictive models for complex projects, decision-makers still require the domain expertise of their most experienced employees. Those workers can determine whether findings make sense based on the experience they have in disciplines such as accounts receivable, logistics or urban planning.

Fact: Big Data Projects Must Protect Individual Privacy

Major breaches at all levels of government have compromised personally identifiable information and protected health data, and agencies face increased pressure — as well as regulatory mandates — to better protect citizen and employee privacy and personal data.

Those pressures are driving departments to reconsider the personal data they collect, and whether they should collect such data at all. They must evaluate all personal data they may combine with logistical, geospatial, educational, regional and other information, whether conducting straightforward descriptive analytics or building complex prescriptive or predictive analytical models.

Fortunately, newer analytics technology integrates capabilities to help enforce data governance, risk management, privacy and security management policies. Agencies can further protect information in Big Data projects through techniques such as data minimization, de-identification, differential privacy and salting.
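As a rough illustration of three of the techniques named above, the sketch below drops fields an analysis does not need (data minimization), replaces an identifier with a salted hash (salting as a form of pseudonymization) and adds Laplace noise to a count (a basic differential privacy mechanism). All function names, field names and the sample record are hypothetical, and the code is a teaching sketch rather than a vetted privacy control.

```python
import hashlib
import random
import secrets


def minimize(record, allowed_fields):
    """Data minimization: keep only the fields the analysis actually needs."""
    return {k: v for k, v in record.items() if k in allowed_fields}


def pseudonymize(identifier, salt):
    """Salting: hash an identifier together with a secret salt.

    The salt must stay secret; if it leaks, low-entropy identifiers
    can be recovered by brute force.
    """
    return hashlib.sha256(salt + identifier.encode("utf-8")).hexdigest()


def noisy_count(true_count, epsilon):
    """Differential privacy: add Laplace noise to a counting query.

    A count has sensitivity 1, so the noise scale is 1 / epsilon.
    The difference of two exponential draws follows a Laplace distribution.
    """
    scale = 1.0 / epsilon
    noise = random.expovariate(1.0 / scale) - random.expovariate(1.0 / scale)
    return true_count + noise


# Hypothetical record; every field and value here is made up for illustration
salt = secrets.token_bytes(16)
record = {"name": "Jane Doe", "resident_id": "R-48213", "zip": "12345", "visits": 4}

safe = minimize(record, {"resident_id", "zip", "visits"})
safe["resident_id"] = pseudonymize(safe["resident_id"], salt)
print(safe)
print(noisy_count(120, epsilon=0.5))
```

Smaller values of `epsilon` add more noise and give stronger privacy at the cost of accuracy, so choosing it is a policy decision as much as a technical one.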

Fallacy: Organizations Don’t Need to Know What Data They Have

Government entities must account for the data they store and analyze. To gain and maintain trust, agencies should first understand the structured and unstructured data housed in databases, email and other systems. They can then gain a better handle on such rich data sources as social media streams, mobile phone communications, and video and other information they capture from the scores of connected devices that make up government-managed infrastructure.

Today, many local government entities, and smaller agencies in particular, don’t have a firm grasp on the data they hold or its value. To improve visibility, a growing list of vendors provides data discovery, inventory and classification products. Also, data-flow mapping tools protect organizations by helping security professionals see where data moves throughout their agencies, and who uses it.
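To give a sense of what a data discovery and classification pass does, here is a minimal sketch that scans records for values resembling common personal data and reports which columns contain them. The patterns, column names and sample rows are assumptions made for illustration; commercial products use far richer detection than a few regular expressions.

```python
import re

# Illustrative patterns only; real classifiers cover many more formats
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "phone": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def classify_columns(rows):
    """Return {column: set of personal-data labels detected in that column}."""
    findings = {}
    for row in rows:
        for column, value in row.items():
            for label, pattern in PATTERNS.items():
                if pattern.search(str(value)):
                    findings.setdefault(column, set()).add(label)
    return findings


# Hypothetical case-management rows
sample_rows = [
    {"case_id": "A-1001", "contact": "jane.doe@example.gov", "notes": "Call 555-867-5309"},
    {"case_id": "A-1002", "contact": "555.123.4567", "notes": "SSN on file: 123-45-6789"},
]

inventory = classify_columns(sample_rows)
for column, labels in sorted(inventory.items()):
    print(column, sorted(labels))
```

Even a crude inventory like this can show that a free-text notes field holds sensitive identifiers, which is the kind of finding that tells an agency where governance controls are needed first.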

Fallacy: Big Data Is Too Big to Take On

Given all of the moving pieces, Big Data projects aren’t easy, but their promise is great. By understanding the benefits and the possible pitfalls, state and local governments can play a more proactive role in transforming their communities.

Start with the data on hand, and coordinate with stakeholders to agree on a plan. Identify the information that should be analyzed, state clear goals and objectives, and define metrics to allow team members to measure progress. Effectively secure the data used and share it with partner agencies. Where appropriate, open it up through community portals to foster citizen engagement and crowdsourcing. Based on proof-of-concept projects, teams can continuously build on efforts to create smarter cities and communities.